The world of infosec is alarmed right now over the security vulnerabilities Google disclosed on Wednesday, which affect Intel, AMD and ARM chips.

The now-infamous Meltdown and Spectre bugs allow an attacker to read sensitive data from a system's memory, including passwords, private keys and other secrets.

Thankfully, fixes are being rolled out swiftly to patch these issues. However, they come at a performance cost, which we will explore with Prometheus in this blog post.

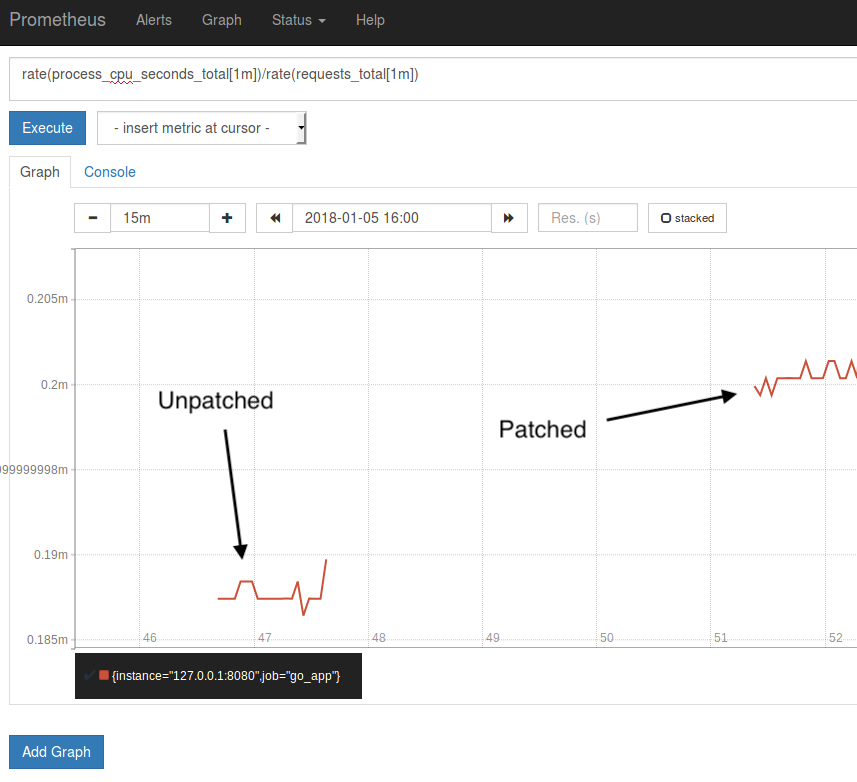

To measure the performance degradation, I compared a patched and an unpatched Ubuntu VM, each running a simple Go application instrumented with the Prometheus client_golang library, and used Prometheus to monitor two of its metrics: `process_cpu_seconds_total` and `requests_total`.

The `process_cpu_seconds_total` metric is exported automatically by the Go client and measures the total user and system CPU time consumed by the process, in seconds. `requests_total` is a counter added in the example code; it counts the total number of requests the application has received.

To generate load, I used Apache Bench (`ab`) to send 10,000 requests to the application. Finally, to compare the two VMs, I divided the per-second average rate of increase (`rate`) of CPU time by that of the request count:

```
rate(process_cpu_seconds_total[1m]) / rate(requests_total[1m])
```

This gives the average CPU time per request, which is on the order of 200 microseconds for this trivial application.
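To show what that query computes, here is a simplified Go sketch of the arithmetic. `simpleRate` is a hypothetical helper that approximates PromQL's `rate()`: it sums the increases between consecutive samples, treating any drop in value as a counter reset as Prometheus does, and divides by the time between the first and last sample. Unlike the real `rate()`, it does not extrapolate to the edges of the `[1m]` window, so results can differ slightly. The sample values in `main` are made up so the result lands at 200 µs; they are not measurements from this post.

```go
package main

import "fmt"

// Sample is one scraped value of a counter.
type Sample struct {
	T float64 // scrape timestamp, in seconds
	V float64 // counter value at that time
}

// simpleRate approximates PromQL's rate(): the average per-second increase of
// a counter across the samples in a window. A drop in value is treated as a
// counter reset (e.g. a process restart). Unlike Prometheus, it does not
// extrapolate to the window boundaries.
func simpleRate(samples []Sample) float64 {
	if len(samples) < 2 {
		return 0 // not enough data to compute a rate
	}
	increase := 0.0
	for i := 1; i < len(samples); i++ {
		delta := samples[i].V - samples[i-1].V
		if delta < 0 {
			// Counter reset: assume it restarted from zero.
			delta = samples[i].V
		}
		increase += delta
	}
	elapsed := samples[len(samples)-1].T - samples[0].T
	if elapsed <= 0 {
		return 0
	}
	return increase / elapsed
}

// cpuSecondsPerRequest mirrors
//
//	rate(process_cpu_seconds_total[1m]) / rate(requests_total[1m])
//
// but returns 0 where PromQL would return NaN or +Inf (no requests in the window).
func cpuSecondsPerRequest(cpu, reqs []Sample) float64 {
	reqRate := simpleRate(reqs)
	if reqRate == 0 {
		return 0
	}
	return simpleRate(cpu) / reqRate
}

func main() {
	// Hypothetical samples scraped every 15 s over one minute; made up for
	// illustration, not measured.
	cpu := []Sample{{0, 0}, {15, 0.25}, {30, 0.5}, {45, 0.75}, {60, 1.0}}
	reqs := []Sample{{0, 0}, {15, 1250}, {30, 2500}, {45, 3750}, {60, 5000}}

	perReq := cpuSecondsPerRequest(cpu, reqs)
	fmt.Printf("%.0f µs of CPU per request\n", perReq*1e6)
}
```

Because both rates are taken over the same window, the window length cancels out in the division, so the result is CPU seconds per request largely independent of how much load was applied.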

The graph above shows a roughly 5% increase in CPU time per request on the patched Ubuntu server compared with the unpatched one while the load is being injected.
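To make the ~5% figure concrete, write $c$ for the CPU seconds per request returned by the query, measured on each VM. The relative increase is

$$
\Delta = \frac{c_{\text{patched}} - c_{\text{unpatched}}}{c_{\text{unpatched}}} \approx 0.05
$$

If the ~200 microsecond figure is taken as the unpatched baseline (an assumption, since it isn't stated which VM it refers to), that works out to roughly 0.05 × 200 µs ≈ 10 µs of extra CPU time per request.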

This slowdown is likely a consequence of the fixes working around speculative execution, which is the root cause of the security flaws but is also an optimisation technique and a fundamental part of how modern CPUs operate.

Want to regain some of that performance loss? Contact us.
